Intel: P5 to Sandy Bridge and Beyond

posted by Shane on 23rd November 2010, at 10:08pm

Most of you reading this will be unaware of a recent announcement by Intel stating that they will release the first batch of CPUs that use their new microarchitecture, codenamed “Sandy Bridge.” A microarchitecture can be thought of as how a CPU is designed from a hardware perspective: how it executes instructions and what other features it provides. Sandy Bridge based CPUs will be released on January 5, 2011 at CES (Consumer Electronics Show). Typically OEMs (Apple, Dell, HP, etc.) get the CPUs first, with consumers gaining access sometime in February. A new microarchitecture typically brings performance enhancements and new low-level chip features. In light of this announcement, I figured I would give a rundown of how Intel advances their CPU lineup.

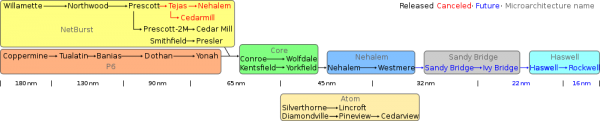

From 1993 until 2006 Intel advanced their CPU technology by focusing almost exclusively on clock speed, the megahertz (MHz) and gigahertz (GHz) speeds we all know. During this time span of roughly 13 years Intel utilized three microarchitectures. These architectures were P5 (i586), P6 (i686), and NetBurst (unofficially, i786). P5 included chips such as the original Pentium and Pentium MMX, P6 included chips like the Pentium Pro, Pentium II, and Pentium III, and finally NetBurst included chips like the Pentium 4. Now you’re wondering, where does Pentium M come in? Pentium M was actually a P6 based chip; more on this later. It should be mentioned that names like P5, P6, NetBurst, and Pentium have no technical meaning; they are just codenames and brand names.

The first P5 architecture chip (the first Pentium as well) had a whopping 60MHz clock speed. Moving forward 18 months, the clock speed increased to 75MHz. By mid-1996 our CPUs were running at 200MHz, approximately 10% of what current CPUs run at. By the time the P5 architecture had run its course it was running at 300MHz. The Pentium II processors overlapped with it in terms of clock speed, ranging from 233MHz to about 450MHz. The Pentium II processors were released from May 1997 through 1998. Under the same P6 architecture came the Pentium III, running between 450MHz and 1.4GHz at the top end. Pentium III CPUs first shipped in February 1999, and new models kept arriving into the early 2000s.

The next stage for Intel was the NetBurst architecture. NetBurst was an entirely new architecture which brought new features to the x86 type CPU. Along with this new architecture came an increase in performance, and the main lever for increasing performance with NetBurst was increasing clock speeds. The first Pentium 4 CPUs launched in November 2000 and the last new models arrived in Q1 2006. Later this style of CPU also included the dual core version, the Pentium D. Pentium 4 CPUs were still being sold as budget CPUs after the Core model had taken off. You will probably remember that there was a time when you would get a new computer with a faster CPU and you would feel a difference right away in whatever work you were doing; this was due in part to the increasing raw speed (MHz and GHz) and the changed microarchitecture. This propelled the NetBurst architecture forward, but Intel hit a wall that caused the cancellation of three NetBurst based CPUs.

Any time a company has to cancel a planned product, it is for a very good reason. As I mentioned, Intel had planned three more CPUs based on the NetBurst architecture. NetBurst CPUs progressed as expected up to “Northwood,” which delivered higher performance than its predecessors. After Northwood, Intel released another CPU named “Prescott.” Many industry experts who watched Intel wondered why Prescott was a member of the NetBurst family, seeing as it had radical design changes. These changes resulted in the CPU using more power and ultimately generating more heat. This was the wall that Intel hit with regard to clock speed: it was no longer feasible to keep increasing it. The last Prescott CPU released hit an astounding 3.8GHz, the highest clock speed Intel had shipped. Unfortunately, even with this very high clock speed, there was less than a 20% increase in performance when Prescott was compared with Northwood. Prescott CPUs also used more power and generated more heat than their older Northwood relatives to perform the same tasks. This resulted in Intel performing a complete 180 and canceling the remaining NetBurst based CPUs.
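One way to see why chasing clock speed runs into a power and heat wall is the textbook approximation for switching power, P ≈ C × V² × f. The sketch below is only an illustration of that formula: the capacitance, voltage, and frequency numbers are placeholders I picked, not measurements of Northwood or Prescott, and the 10% voltage bump is an assumption.

```python
def dynamic_power(capacitance_f, voltage_v, frequency_hz):
    """Textbook approximation of switching power: P = C * V^2 * f (watts)."""
    return capacitance_f * voltage_v ** 2 * frequency_hz

# Hypothetical baseline chip (placeholder numbers, not real Intel data).
base = dynamic_power(capacitance_f=1.0e-8, voltage_v=1.3, frequency_hz=3.0e9)

# Same chip clocked 20% higher. Higher clocks usually need more voltage,
# so assume (purely for illustration) a 10% voltage increase as well.
fast = dynamic_power(capacitance_f=1.0e-8, voltage_v=1.3 * 1.1, frequency_hz=3.0e9 * 1.2)

power_ratio = fast / base
print(f"A 20% clock increase costs about {100 * (power_ratio - 1):.0f}% more power")
```

Because voltage enters squared, power grows much faster than clock speed under these assumptions, which matches the general pattern described above.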

The first way Intel addressed this problem was to change how CPU design was managed. Rather than focusing on clock speed, Intel adopted the “tick-tock” model. In Intel’s usage, each “tock” introduces a new microarchitecture and each “tick” is a die-shrink of the existing one; a die-shrink moves the chip to a smaller manufacturing process. For example, the first Core based CPUs (a “tock”) were manufactured using a 65nm (nanometre) process, and roughly a year later the “tick” moved the same style of CPU to a 45nm process. Die-shrinks allow a CPU to achieve the same performance using less power and generating less heat, which in turn allows clock speeds to be increased, improving performance further. This is the current model Intel uses for CPU evolution.
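As a back-of-the-envelope illustration of what a die-shrink buys, the sketch below assumes an idealized case where every feature on the chip scales linearly with the process node, so area scales with the square of the ratio. Real chips do not shrink this cleanly, and the function name is my own.

```python
def ideal_area_scale(old_nm, new_nm):
    """Idealized area ratio when every linear dimension scales with the node."""
    return (new_nm / old_nm) ** 2

scale = ideal_area_scale(65, 45)
print(f"65nm -> 45nm: the same design needs roughly {scale:.0%} of the original area")
```

Under that assumption the same design fits in about half the silicon, which leaves room for more cache or cores, or for simply making a cheaper, cooler chip.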

The second way Intel addressed their grave problem was to go back to the drawing board completely. Rather than iterating on NetBurst to make it more efficient, Intel scrapped it. The new Core microarchitecture was much more like a P6 based Pentium M or Pentium III than a NetBurst Pentium 4. Thankfully this radical shift back to a P6 based design worked, propelling Intel ahead and possibly contributing to their “leap ahead” campaign. With the introduction of the Core architecture, Apple adopted Intel’s new fast and cool Merom mobile chips for their notebooks and consumer desktops. Core brought many advancements to Intel’s CPU line, more than were achieved during the NetBurst years. The successor to Core, Nehalem, continued to evolve Intel’s CPU line with more advanced features such as on-chip memory controllers and on-chip graphics processing.

In summary, Intel addressed the failure of NetBurst by adopting the “tick-tock” system, which alternates new microarchitectures with die-shrinks to increase performance. Intel focused on evolving the older P6 architecture rather than NetBurst. The new way of doing things also de-emphasized clock speed, which is why an Arrandale (mobile codename) Core i5 at 2.4GHz is more powerful than a 2.4GHz Penryn (mobile codename) Core 2 Duo. This shift allowed Intel to produce efficient chips that used less power and generated less heat. This will be the future of development until another important barrier is reached, which I will discuss at the end of the article.
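A simple way to think about why two chips at the same clock speed can perform differently is that throughput is roughly instructions per cycle (IPC) multiplied by clock speed. The IPC values below are placeholders chosen only to illustrate the idea; they are not benchmarks of Arrandale or Penryn.

```python
def instructions_per_second(ipc, clock_hz):
    """Rough throughput model: instructions per cycle times cycles per second."""
    return ipc * clock_hz

# Hypothetical IPC figures for an older and a newer design at the same clock.
older_chip = instructions_per_second(ipc=1.0, clock_hz=2.4e9)
newer_chip = instructions_per_second(ipc=1.3, clock_hz=2.4e9)

speedup = newer_chip / older_chip
print(f"Same 2.4GHz clock, {speedup:.1f}x the throughput")
```

In this model a better microarchitecture raises IPC, so performance improves even when the GHz number on the box stays the same.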

This brings us to now and the upcoming release of Sandy Bridge in January. Using what we have learned so far we know that:

- Sandy Bridge is a new microarchitecture.

- Since we are dealing with a new microarchitecture, we are in what Intel calls the “tock” phase of its tick-tock cadence.

- A “tick” (a die-shrink of Sandy Bridge) is expected sometime in the second half of 2011, which should allow higher clock speeds and give a small performance boost.

Sandy Bridge will start with a 32nm fabrication process and later be shrunk to 22nm at that following tick. Sandy Bridge will continue to sport integrated memory controllers and on-chip graphics with increased performance. Sandy Bridge will also bring increased efficiency with floating point calculations (operations on decimal numbers, often used for scientific calculations). The shift Sandy Bridge will bring is not going to be as revolutionary as Nehalem but is still a reason to wait to upgrade.

I invite you to take a look at Intel’s roadmap:

http://en.wikipedia.org/wiki/File:IntelProcessorRoadmap.svg

From the roadmap it is clear that Intel is set until about 2013 at their present rate of development. Haswell is slated for release in 2013 with Rockwell following. What happens after the 16nm stage is not clear. Intel will have to find another way to innovate, in the same way they did after the NetBurst failure. Anyone familiar with physics will know that at feature sizes below roughly 10nm, the classical rules that transistor design relies on start to break down. This happens because we enter the realm of Quantum Mechanics, where effects such as electrons tunneling through barriers that are supposed to block them become significant. From my point of view Intel has two choices:

- Figure out how to work with the unpredictability that can occur at an 11nm process and build one more solid traditional CPU. This CPU would then have to be extended to have as many processing cores as possible within the given power and heat constraints. The multi-core approach would require operating systems to be re-designed and developer training to focus on parallel programming.

- The second choice, and the more likely one in my view since Intel is a business that needs to turn a profit, would be to venture into the realm of Quantum Computing. In 2010 Quantum Computing is still mostly theoretical, with the exception of one Quantum Computer in Vancouver. Quantum Computing would bring a major increase in computing power, which could be likened to achieving warp drive, both in the speed increase and in that it is pure theory at this time.
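The multi-core option in the first choice depends heavily on software actually being written to run in parallel. Amdahl’s law is the standard way to estimate the payoff: if a fraction p of a program can be spread across n cores, the best-case speedup is 1 / ((1 - p) + p / n). Here is a short sketch, with the 90% parallel figure picked purely as an example:

```python
def amdahl_speedup(parallel_fraction, cores):
    """Best-case speedup from Amdahl's law: 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)

# Assume (for illustration) a program where 90% of the work can run in parallel.
for cores in (2, 4, 8, 64):
    print(f"{cores:>2} cores: {amdahl_speedup(0.9, cores):.2f}x")
```

With 10% of the work stuck running serially, the speedup can never exceed 10x no matter how many cores are added, which is why simply piling on cores is not enough without a shift toward parallel programming.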

We are on the edge of a computing revolution. The amount of computing power to be harvested in the next 5 to 10 years is astounding. With this being said, I invite everyone to look forward to the future and the enhancements we will see. I am personally holding out on upgrading my gaming computer (currently running a quad core Yorkfield) until Haswell at the earliest, unless I have some random power spike from my power supply again ;).

If you have any questions please do send me a private message on the forums!